Debunking Security/AppSec in AI-Generated Code

There's a lot of AI-generated code out there right now. From various discussions on forums and in person, it's clear that AI is currently helping with much of the low-hanging fruit of code generation. A great example of this is tests (e.g., unit tests, mock tests, integration tests).

Truth be told, it's a great thing. Algorithms and critical thinking can now be "put on paper" faster than ever before.

The question is: how secure is it? In this blog post, you'll find out the answer.

Prerequisites

If you'd like to follow along with this blog post from a hands-on perspective, you'll need:

- An LLM tool like Cursor or Claude Code to generate the project from a prompt.

- The Snyk CLI, which you can use for free.

If you don't want to follow along hands-on, that's totally fine: the knowledge in this blog post applies to any project with AI-generated code that needs to be scanned for security vulnerabilities and potential exploits.

What Is AI-Generated Code?

AI-generated code is code produced by prompting an AI tool like Claude Code, Cursor, GitHub Copilot, Goose, or many others to write a specific piece of code for you. It could be a few lines of automation code, some Infrastructure-as-Code, or a full-blown microservice.

The code that gets generated comes from the LLM you're using (Gemini, Claude Sonnet, ChatGPT, etc.).

The Security Risks

When it comes to well-known security risks, AI-generated code has more or less the same risks as human-written code, such as:

- SQL injection

- Cross-site scripting (XSS)

- Insecure dependencies

- Old versions of libraries

The list goes on and on.
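To make the first item concrete, here's a minimal, self-contained sketch of SQL injection using Python's built-in `sqlite3` module. The `samples` table and the function names are hypothetical. The unsafe version builds the query by string concatenation, a pattern that can show up in both human-written and generated code; the safer version uses a parameterized query.

```python
import sqlite3


def find_sample_unsafe(conn: sqlite3.Connection, label: str):
    # VULNERABLE: user input is concatenated straight into the SQL string,
    # so a label like "x' OR '1'='1" changes the meaning of the query.
    query = "SELECT id, label FROM samples WHERE label = '" + label + "'"
    return conn.execute(query).fetchall()


def find_sample_safe(conn: sqlite3.Connection, label: str):
    # SAFER: a parameterized query; the driver passes `label` as data,
    # never as part of the SQL statement.
    return conn.execute(
        "SELECT id, label FROM samples WHERE label = ?", (label,)
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE samples (id INTEGER PRIMARY KEY, label TEXT)")
    conn.executemany(
        "INSERT INTO samples (label) VALUES (?)", [("S-001",), ("S-002",)]
    )
    payload = "x' OR '1'='1"
    print(len(find_sample_unsafe(conn, payload)))  # 2: every row leaks
    print(len(find_sample_safe(conn, payload)))    # 0: no sample has that label
```

In Django, the ORM parameterizes queries for you, and raw SQL through `cursor.execute(sql, params)` should pass user input in `params` rather than formatting it into the string.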

The major problem from a security perspective is how the Model you're using was trained. Remember, these Models are trained on datasets built from public data. That means any wrong answer to a question on a forum (for example, an answer containing vulnerable code) can end up in what the Model learns from. The Model doesn't inherently know whether a piece of code is secure or not.
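Here's an illustration of the kind of vulnerable snippet a Model could reproduce if it learned from insecure examples, this time for XSS: a hypothetical helper that pastes user input straight into HTML, next to a version that escapes it with Python's standard `html.escape`. The function names are made up for this sketch.

```python
import html


def render_result_unsafe(note: str) -> str:
    # VULNERABLE: user-supplied text is dropped into HTML as-is,
    # so a note containing <script> runs in the viewer's browser.
    return "<p>Result note: " + note + "</p>"


def render_result_safe(note: str) -> str:
    # SAFER: escape &, <, >, and quotes before inserting into HTML.
    return "<p>Result note: " + html.escape(note) + "</p>"


payload = "<script>alert('xss')</script>"
print(render_result_unsafe(payload))  # script tag survives intact
print(render_result_safe(payload))    # &lt;script&gt;alert(&#x27;xss&#x27;)&lt;/script&gt;
```

Django's template engine escapes variables automatically, so in a Django project this risk usually appears where code bypasses it, for example with `mark_safe` or the `|safe` filter.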

Another problem is the business-specific logic that the Model doesn't know about when it creates the code. For example, your organization may specialize in Django, a popular Python web framework. If you use an LLM to generate a new web API project and you don't specify which framework you want, it could default to FastAPI or Flask. This doesn't seem like a security issue right off the bat, but if the generated code doesn't reflect your business logic and standards, that gap can lead to vulnerabilities.
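One lightweight guard is to check a generated project against your organization's standards before it goes any further. The sketch below assumes a hypothetical policy in which Django is the only approved web framework, and that dependencies are listed in a requirements.txt-style file; `package_names` and `framework_violations` are illustrative names, not part of any tool.

```python
import re

# Hypothetical organization policy: Django is the approved web framework.
APPROVED_FRAMEWORK = "django"
UNAPPROVED_FRAMEWORKS = {"flask", "fastapi"}


def package_names(requirements_text: str) -> set[str]:
    """Return lowercase package names from requirements.txt-style text."""
    names = set()
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options such as -r or -e
        # The name ends at the first version specifier, extra, or marker.
        match = re.match(r"[A-Za-z0-9._-]+", line)
        if match:
            names.add(match.group(0).lower())
    return names


def framework_violations(requirements_text: str) -> list[str]:
    """List the ways a dependency file departs from the framework policy."""
    names = package_names(requirements_text)
    problems = []
    if APPROVED_FRAMEWORK not in names:
        problems.append(f"{APPROVED_FRAMEWORK} is not listed")
    for name in sorted(names & UNAPPROVED_FRAMEWORKS):
        problems.append(f"unapproved framework: {name}")
    return problems


print(framework_violations("fastapi\nuvicorn\n"))
```

A check like this won't find vulnerabilities on its own; it only catches the "wrong defaults" problem early, before the code is reviewed and scanned.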

Test 1: Without Specifying Security Measures

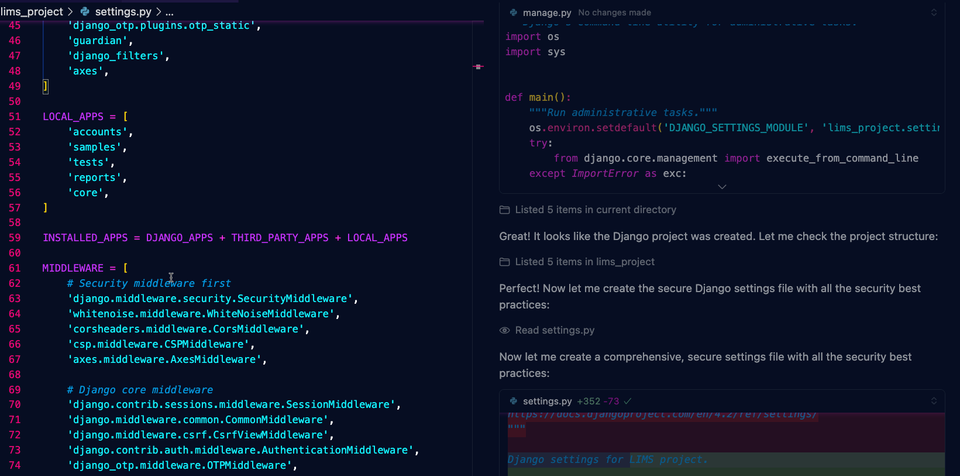

In Test 1, the prompt below asks for a Django-based application for a LIMS (Laboratory Information Management System), a type of software for managing laboratory samples and data.

Take the prompt below and run it in your LLM tool.

I want to create a Django application (Python framework).

This application will be to create an MVP of a Laboratory Information Management System (LIMS)

Here are a few details about the application:

1. Sample tracking: Registering, labeling, and tracking the movement of samples within the lab.

2. Test management: Defining tests, associating them with samples, and recording results.

3. Data entry and storage: Creating forms for data input and storing data in a database (e.g., PostgreSQL).

4. User authentication and authorization: Managing user access and permissions.

5. Reporting: Generating reports on sample status, test results, and other lab data.

Then go through the process of saying "yes" to what the Model generates.

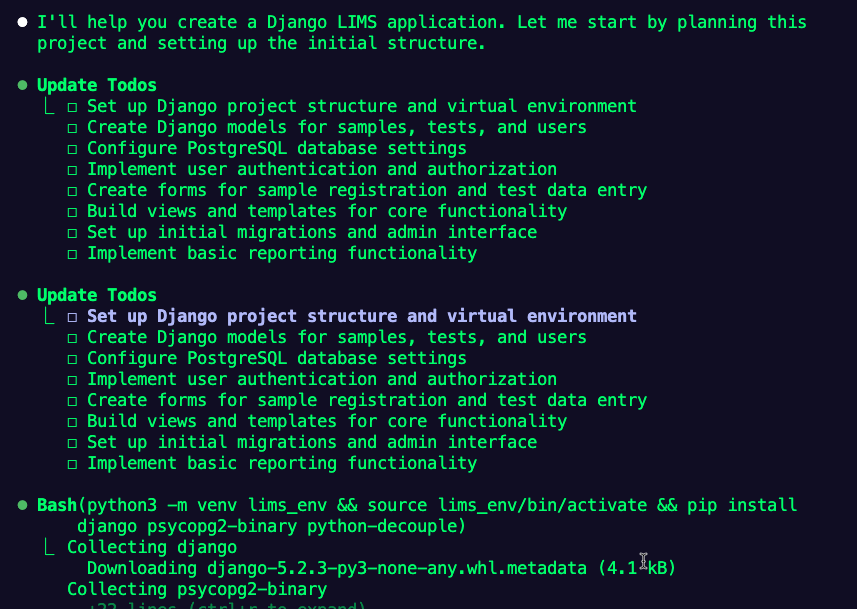

Once everything is generated, you should see a directory structure similar to the one in the screenshot.

Now that the code is generated, run a Snyk Code test (static analysis) against it from the project's root directory:

snyk code test

You may see vulnerabilities reported in output similar to the following:

✗ [Low] Use of Hardcoded Credentials

Path: lims/management/commands/populate_sample_data.py, line 125

Info: Do not hardcode credentials in code. Found hardcoded credential used in username.

✗ [High] Hardcoded Secret

Path: lims_project/settings.py, line 23

Info: Avoid hardcoding values that are meant to be secret. Found a hardcoded string used in here.

✔ Test completed

Organization: asoftware417

Test type: Static code analysis

Project path: /Users/michael/super-sick-app

Summary:

2 Code issues found

1 [High] 1 [Low]

As you can see, Copilot with Claude Sonnet 4 as the model hard-coded secrets into the project. This is, of course, something that would fail any compliance test.
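Both findings have a common fix: keep secrets out of the source code and read them from the environment (or a secrets manager) at runtime. The sketch below assumes the string flagged in `settings.py` is Django's `SECRET_KEY`, which the Snyk output doesn't actually confirm. The helper `get_required_env` and the variable names `DJANGO_SECRET_KEY`, `LIMS_SEED_USERNAME`, and `LIMS_SEED_PASSWORD` are hypothetical.

```python
import os
import secrets


def get_required_env(name: str) -> str:
    """Read a setting from the environment and fail loudly if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# In settings.py (hypothetical variable name), the key would come from the
# environment instead of a string literal committed to the repository:
#   SECRET_KEY = get_required_env("DJANGO_SECRET_KEY")
#
# In a management command such as populate_sample_data.py (hypothetical
# variable names), seed-user credentials would come from the environment too:
#   username = get_required_env("LIMS_SEED_USERNAME")
#   password = get_required_env("LIMS_SEED_PASSWORD")

if __name__ == "__main__":
    # Generate a random value once, outside the codebase, and store it
    # in your secrets manager or deployment environment.
    print(secrets.token_urlsafe(50))
```

Failing loudly when the variable is missing is a deliberate choice: a silent fallback to a default key would quietly reintroduce the problem Snyk flagged.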

Test 2: Specifying That the Code Should Be "Secure"

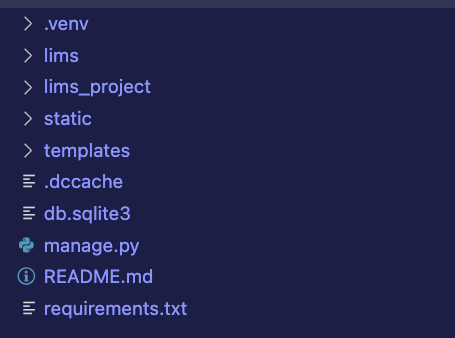

In this section, you'll run the same prompt, except this time you'll ask for the code to be secure by following best practices. The Snyk test will also run against the project's dependencies instead of the code itself.
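At its core, a dependency scan compares the package versions your project uses against a database of known-vulnerable version ranges. The toy sketch below shows only that idea, using a hard-coded advisory list that is entirely made up (`examplepkg` and `EXAMPLE-0001` are not real). It is not how Snyk is implemented: real scanners also resolve transitive dependencies, handle pre-release versions, and rely on a maintained vulnerability database.

```python
# Toy dependency check, for illustration only. "examplepkg" and
# "EXAMPLE-0001" are made up; they do not describe a real package or advisory.
ADVISORIES = {
    # package name -> list of (advisory id, first fixed version)
    "examplepkg": [("EXAMPLE-0001", (2, 0, 0))],
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '1.4' into (1, 4, 0). Assumes plain numeric versions (no 'rc1')."""
    parts = [int(part) for part in text.split(".")]
    parts += [0] * (3 - len(parts))  # pad so '2.0' compares equal to '2.0.0'
    return tuple(parts)


def parse_pins(requirements_text: str) -> dict[str, tuple[int, ...]]:
    """Collect 'name==version' pins; unpinned lines are ignored in this sketch."""
    pins = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = parse_version(version.strip())
    return pins


def find_vulnerable(requirements_text: str) -> list[str]:
    """Report each pinned package whose version is below an advisory's fix."""
    findings = []
    for name, installed in parse_pins(requirements_text).items():
        for advisory_id, fixed_in in ADVISORIES.get(name, []):
            if installed < fixed_in:  # tuples compare element by element
                version = ".".join(str(part) for part in installed)
                findings.append(f"{name} {version}: {advisory_id}")
    return findings


print(find_vulnerable("examplepkg==1.4.2\notherpkg==3.0\n"))
```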

Notice the last paragraph of the prompt below, which asks for the application to be "as secure as possible".

Delete the code that was generated in the previous section and run the prompt below.

I want to create a Django application (Python framework).

This application will be to create an MVP of a Laboratory Information Management System (LIMS)

Here are a few details about the application:

1. Sample tracking: Registering, labeling, and tracking the movement of samples within the lab.

2. Test management: Defining tests, associating them with samples, and recording results.

3. Data entry and storage: Creating forms for data input and storing data in a database (e.g., PostgreSQL).

4. User authentication and authorization: Managing user access and permissions.

5. Reporting: Generating reports on sample status, test results, and other lab data.

Make this application as secure as possible following best practices for Python, Django, and overall AppSec. Check out the latest CVEs, look into reports like CIS, and confirm you aren't building any code with known exploits from MITRE.

Once the code is generated, run the following command, which scans the project's dependencies:

snyk test

Below are the results of the test:

Tested 64 dependencies for known issues, found 21 issues, 41 vulnerable paths.

As you can see, even with a prompt asking for the code to be as secure as possible, there are quite a few critical vulnerabilities in the dependencies.
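To see how those issues break down by severity, you can ask the CLI for machine-readable output with `snyk test --json` and summarize it. The sketch below assumes a single-project report with a top-level `vulnerabilities` array whose entries carry `id` and `severity` fields; verify that against the JSON your CLI version actually produces. The script name `summarize_snyk.py` and the helpers `severity_counts` and `should_fail` are hypothetical. Entries are de-duplicated by `id` on the assumption that the same issue is listed once per vulnerable path, which would explain why the CLI reports fewer issues than vulnerable paths.

```python
import json
import sys
from collections import Counter

SEVERITY_ORDER = ["critical", "high", "medium", "low"]


def severity_counts(report: dict) -> Counter:
    """Count unique issues per severity in a `snyk test --json` report.

    Assumes a top-level "vulnerabilities" list whose entries have "id" and
    "severity" keys; repeated ids (one per vulnerable path) count once.
    """
    unique = {}
    for vuln in report.get("vulnerabilities", []):
        unique[vuln["id"]] = vuln["severity"]
    return Counter(unique.values())


def should_fail(counts: Counter, threshold: str = "high") -> bool:
    """True if any issue is at or above the `threshold` severity."""
    blocking = SEVERITY_ORDER[: SEVERITY_ORDER.index(threshold) + 1]
    return any(counts[level] for level in blocking)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: snyk test --json > snyk-results.json
    #        python summarize_snyk.py snyk-results.json
    with open(sys.argv[1]) as handle:
        counts = severity_counts(json.load(handle))
    for level in SEVERITY_ORDER:
        print(f"{level}: {counts[level]}")
    sys.exit(1 if should_fail(counts) else 0)
```

If all you need is a pass/fail gate in CI, the CLI's own `--severity-threshold=high` option is simpler; a script like this only earns its place when you also want the per-severity summary.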

Conclusion

Out of the box, AI-generated code is not secure. Truth be told, a lot of human-written code isn't secure out of the box either. The difference is that humans can deliberately choose to write code with a security-first mindset: using the latest version of a framework, not storing passwords in the code, and making other sensible decisions so that the code at least has no known exploits.
