Configuring an AWS EKS Management Cluster

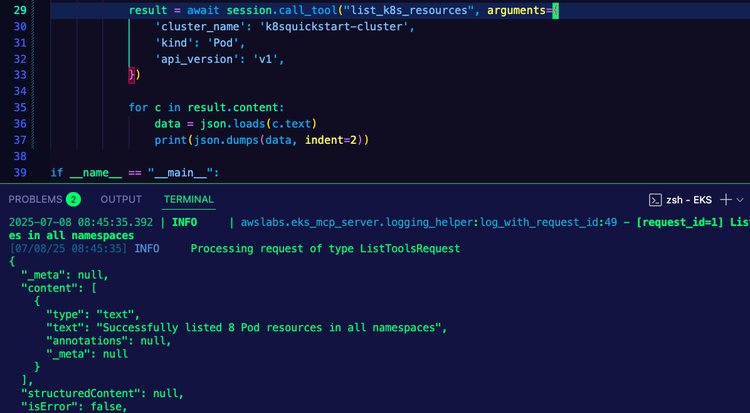

Kubernetes is very particular about how workloads are deployed and how engineers manage them. That uniqueness can create the need for one cluster that has the ability to manage certain aspects of other clusters. This gives you a central way to manage particular workloads instead of managing every workload in a "snowflake" fashion.

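In this blog post, you'll learn what a management cluster for Kubernetes is, which tools are a good fit for one, and how to deploy one of those tools (ArgoCD) to an EKS management cluster with GitHub Actions.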

What's A Management Cluster

How engineers and applications interact with, deploy to, and manage Kubernetes is very different from other platforms such as SaaS, PaaS, and virtual machines (VMs). In Kubernetes, everything is done through an API, and most of the time engineers interact with those APIs through Kubernetes manifests.

For example, k8s manifests can describe everything from what the application stack looks like, to which databases it interacts with, to which secrets it retrieves, and everything in between.
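To make that concrete, here is a small, hypothetical manifest: a Deployment that describes the application container and pulls a database password from a Secret. The names `web`, `db-credentials`, and the database host are placeholders for illustration, not part of any real setup.

```yaml
# Hypothetical example: one manifest describing the app, its database
# connection, and the Secret it reads from.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
          env:
            # Which database the app talks to (hypothetical host)
            - name: DB_HOST
              value: postgres.example.internal
            # Password retrieved from a Kubernetes Secret (hypothetical name)
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-credentials
                  key: password
```

Applying a file like this with `kubectl apply -f` is a call to the Kubernetes API, which is why tooling that manages many clusters ends up managing manifests like this one.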

Chances are, an organization is going to have multiple Kubernetes clusters, and those clusters need to be managed in a particular way because of how application stacks and integrations are handled. If clusters aren't managed with any type of centralized tooling, every Kubernetes cluster becomes unique in its own way, and tools/workflows have to be deployed to each cluster one after another. As a result, environments lack modularity and it's all repetitive work, which some may see as not staying DRY (Don't Repeat Yourself).

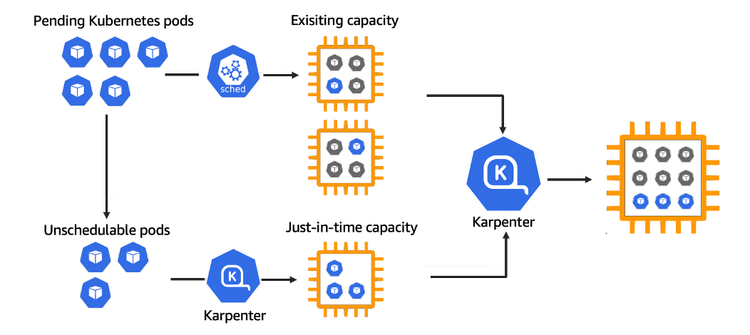

Management clusters aim to solve this issue by providing a Control Plane of sorts: the brains of the operation for specific workloads. You send workloads and configurations to the management cluster (not the k8s Control Plane, but the management cluster), and the management cluster then sends those configurations out to the appropriate Kubernetes clusters it has access to. This allows configurations to be worked on in one place and sent to multiple clusters, instead of pushing each configuration to every single cluster.
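As a hedged illustration of that fan-out, here is how it can look with ArgoCD, one of the tools discussed below. An ApplicationSet using the cluster generator tells ArgoCD on the management cluster to create one Application per cluster it knows about. The repo URL and path point at Argo's public guestbook example and stand in for your own configuration repo; the `guestbook` names are placeholders.

```yaml
# Applied once, to the management cluster only.
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: guestbook
  namespace: argocd
spec:
  generators:
    # Cluster generator: one Application per cluster Argo CD knows about
    - clusters: {}
  template:
    metadata:
      # {{name}} and {{server}} are filled in per cluster by the generator
      name: '{{name}}-guestbook'
    spec:
      project: default
      source:
        repoURL: https://github.com/argoproj/argocd-example-apps.git
        targetRevision: HEAD
        path: guestbook
      destination:
        server: '{{server}}'
        namespace: guestbook
      syncPolicy:
        syncOptions:
          - CreateNamespace=true
```

The configuration lives in one place; registering another cluster with ArgoCD is enough for it to receive the same workload.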

When Management Clusters Don't Work

Not every tool/third-party service will work with a management cluster. If a tool has no concept of a Control Plane or of multi-cluster management, it won't be able to manage other clusters for its particular use case and therefore isn't a good fit.

You'll learn about a few tools that fit the bill for a management cluster in the next section.

What Tools To Add

Now that you know what a management cluster is and why you'd want to use it, the next important question is which tools you want on the management cluster. Remember, the management cluster is like the brain of the operation, so it should host tools that need some type of Control Plane (not the k8s Control Plane).

For example, a good tool to install on a management cluster is ArgoCD. With ArgoCD, you have a server running Argo (the management cluster), and you can register other Kubernetes clusters to that instance of ArgoCD. That way, you aren't running a new instance of ArgoCD in every cluster, which would add major overhead and leave no true centralized location for the configurations.
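Registering a cluster can be done with the ArgoCD CLI (`argocd cluster add <kube-context-name>`) or declaratively. Below is a minimal sketch of the declarative form, following ArgoCD's documented cluster Secret format: a Secret in the `argocd` namespace carrying the `argocd.argoproj.io/secret-type: cluster` label. The cluster name, API server URL, token, and CA data are hypothetical placeholders.

```yaml
# Applied to the management cluster; tells Argo CD how to reach another cluster.
apiVersion: v1
kind: Secret
metadata:
  name: dev-cluster-secret            # hypothetical name
  namespace: argocd
  labels:
    # This label is what makes Argo CD treat the Secret as a cluster definition
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: dev-cluster                   # display name in Argo CD (hypothetical)
  server: https://dev-cluster.example.com   # target API server (hypothetical)
  config: |
    {
      "bearerToken": "<service-account-token>",
      "tlsClientConfig": {
        "insecure": false,
        "caData": "<base64-encoded-ca-certificate>"
      }
    }
```

Keeping Secrets like this in Git (encrypted, or sourced from a secrets manager) means the list of managed clusters is itself version-controlled.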

Which tools you should use really depends on what you're running and how you're running it. However, below are a few examples of common tools that you may see on a management cluster.

- GitOps: ArgoCD

- Authentication and Authorization: your own containerized AuthN/Z services for OIDC, if you run them.

- Service Mesh: Gloo Mesh and Istio have the concept of a Control Plane. Agents run on Kubernetes clusters and if you want one of those clusters to receive a particular Service Mesh configuration, it would run through the Service Mesh Control Plane and get sent to the agent.

- Secrets: Some organizations run Secret Managers like HashiCorp Vault on a central Kubernetes cluster and have other clusters connect to it to retrieve secrets.

- Cluster Management: Tools like Rancher may be deployed to one cluster (the management cluster) and the other clusters within the organization can register to Rancher (running in the management cluster). That way, each cluster doesn't have to run an instance of Rancher.

Configuring A Management Cluster

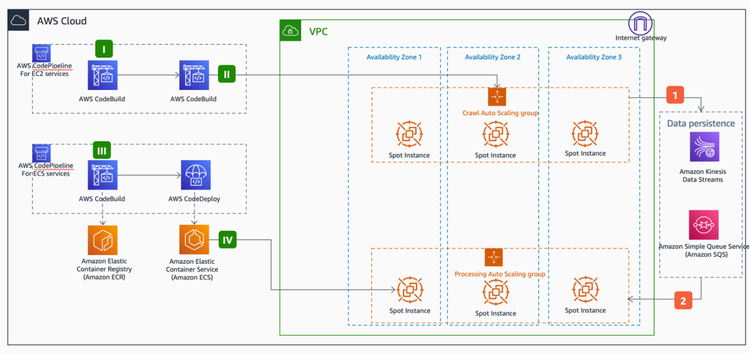

With a list of potential tools that you may deploy to a management cluster, the question then becomes "How am I going to get the tools onto the management cluster?". The answer typically falls under the realm of CICD. With a pipeline, you can connect to the management cluster and deploy the tools in a repeatable fashion instead of having to manually install all of the tools on the management cluster. This also helps when you have to redeploy or create a new management cluster - you'll have an automated way to get the tools back.

Because ArgoCD is one of the primary tools that needs to be on a management cluster, let's use it as an example of what to deploy to a management cluster.

For the purposes of the configuration, this blog post will use:

- EKS

- GitHub Actions

However, the same process can be used on any CICD tool and Kubernetes cluster.

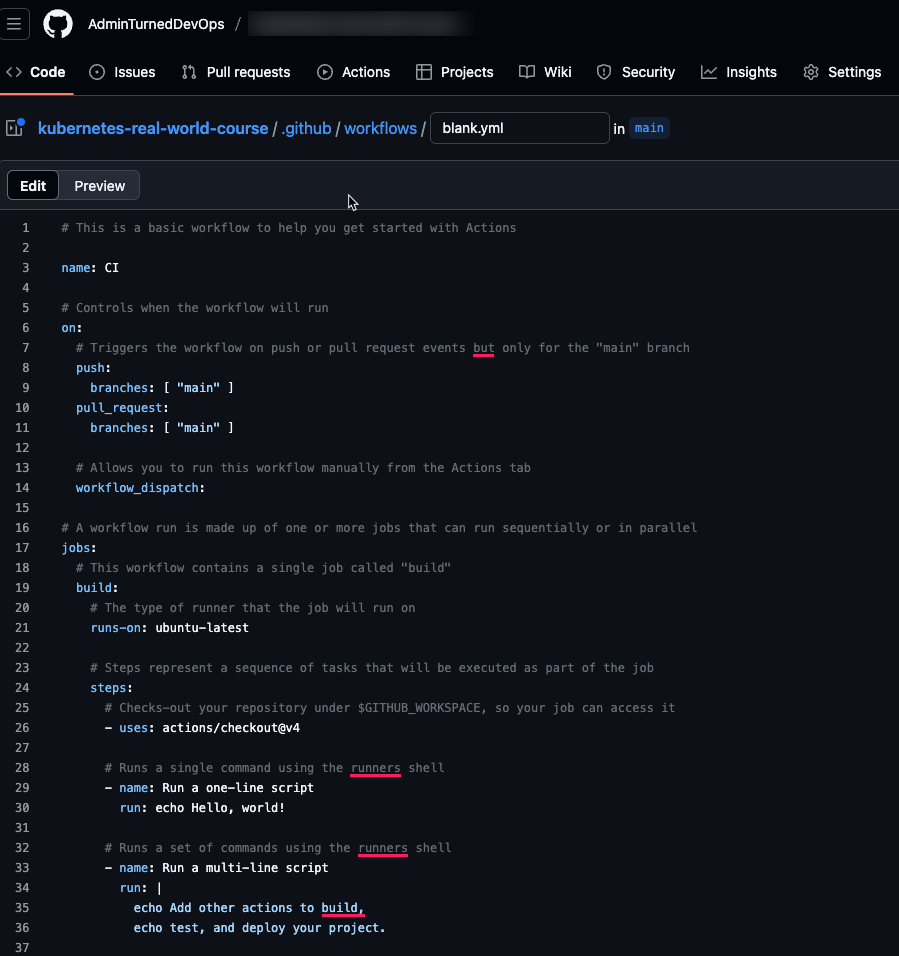

Before starting, create a new GitHub Actions workflow file in your repo's `.github/workflows/` directory. You can remove the defaults that are in the workflow file.

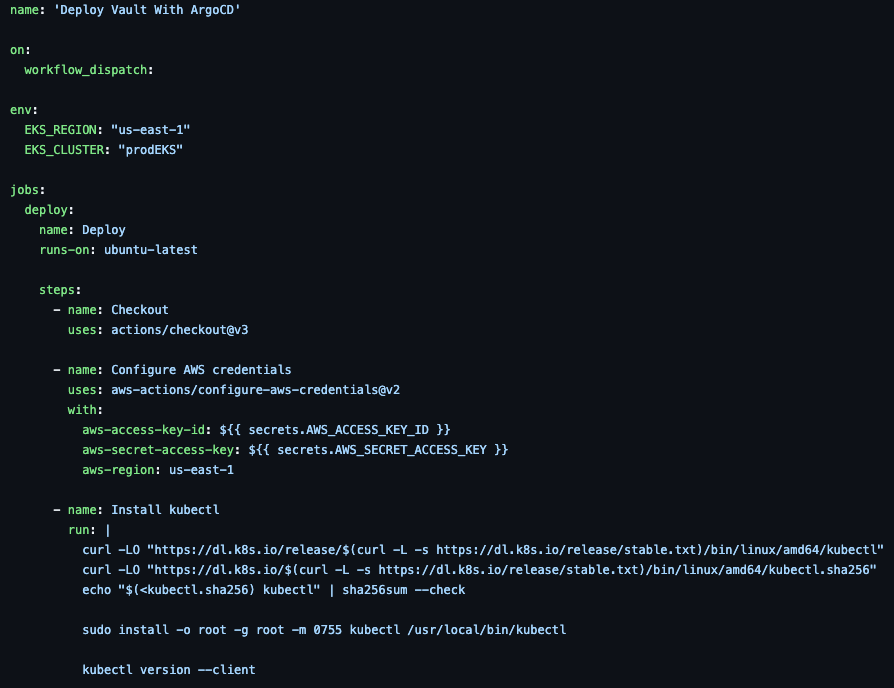

- First, set up a name for the pipeline and use the `workflow_dispatch` trigger so the pipeline only runs when you start it manually, rather than automatically when code is pushed to the repo.

```yaml
name: 'Deploy ArgoCD To EKS'

on:
  workflow_dispatch:
```

- Specify a few environment variables, such as the cluster name and region.

```yaml
env:
  EKS_REGION: "us-east-1"
  EKS_CLUSTER: "prodEKS"
```

- Configure the job to run on an Ubuntu GitHub-hosted runner, the machine that executes the pipeline.

```yaml
jobs:
  deploy:
    name: Deploy
    runs-on: ubuntu-latest
```

- Use the Checkout step (`actions/checkout`) to pull the code from the repo onto the GitHub Actions runner.

```yaml
    steps:
      - name: Checkout
        uses: actions/checkout@v3
```

- The first step is to configure credentials within the pipeline. These credentials must be able to authenticate to your cluster and have the appropriate permissions/RBAC to deploy workloads.

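You'll need to set up the secrets in your repo; in GitHub, add AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY under Settings > Secrets and variables > Actions. The majority of CICD tools have a way to configure secrets/variables.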
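If you'd rather not store long-lived access keys as repo secrets, `aws-actions/configure-aws-credentials` can instead assume an IAM role through GitHub's OIDC provider. This is a sketch, not the approach used in the rest of this post: the role ARN is hypothetical, the role must trust GitHub's OIDC provider, and (just like an access-key user) it must be mapped to Kubernetes RBAC in the cluster, for example through the `aws-auth` ConfigMap or EKS access entries.

```yaml
jobs:
  deploy:
    name: Deploy
    runs-on: ubuntu-latest
    # Let the job request a GitHub OIDC token (required for role-to-assume)
    permissions:
      id-token: write
      contents: read   # still needed by actions/checkout
    steps:
      - name: Checkout
        uses: actions/checkout@v3

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v2
        with:
          # Hypothetical IAM role that trusts GitHub's OIDC provider
          role-to-assume: arn:aws:iam::123456789012:role/github-actions-eks-deploy
          aws-region: us-east-1
```

With this variant, no AWS keys live in the repo's secrets; the action obtains short-lived credentials for each run.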

```yaml
      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v2
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1
```

- The second step in the pipeline is to install Helm, the package manager that will be used to install ArgoCD.

```yaml
      - name: Install Helm
        run: |
          curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
          chmod 700 get_helm.sh
          ./get_helm.sh
```

- The third step is to authenticate to your cluster so the kubeconfig gets pulled down onto the GitHub Actions runner. That way, the pipeline can connect to the cluster to install ArgoCD.

Please note that this step will be different based on the Kubernetes cluster you're deploying to. For example, if you're deploying to Azure Kubernetes Service (AKS), you'll need a step for that instead of a step for EKS.
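For comparison, an AKS version of the connection step might look like the sketch below. It is not tested against a real cluster and rests on assumptions: an `AZURE_CREDENTIALS` repo secret holding a service principal JSON, and hypothetical `AKS_RESOURCE_GROUP` and `AKS_CLUSTER` variables defined in the workflow's `env` block. Clusters that use Microsoft Entra ID integration may need extra steps, such as kubelogin, for non-interactive access.

```yaml
      # Hypothetical AKS equivalent of the EKS connection step
      - name: Azure login
        uses: azure/login@v1
        with:
          creds: ${{ secrets.AZURE_CREDENTIALS }}

      # Writes the AKS cluster's credentials into the runner's kubeconfig
      - name: Connect to AKS cluster
        run: az aks get-credentials --resource-group $AKS_RESOURCE_GROUP --name $AKS_CLUSTER
```

The Helm steps that follow stay the same; only the authentication and kubeconfig steps change per cloud.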

```yaml
      - name: Check AWS version
        run: |
          aws --version
          aws configure set aws_access_key_id $AWS_ACCESS_KEY_ID
          aws configure set aws_secret_access_key $AWS_SECRET_ACCESS_KEY
          aws configure set region $EKS_REGION
          aws sts get-caller-identity

      - name: Connect to EKS cluster
        run: aws eks --region $EKS_REGION update-kubeconfig --name $EKS_CLUSTER
```

- The final steps are to add the Argo Helm repository, use Helm to install ArgoCD, and verify the release.

```yaml
      - name: Helm Install ArgoCD Repo
        shell: bash
        run: helm repo add argo https://argoproj.github.io/argo-helm

      - name: Helm Install ArgoCD
        shell: bash
        run: |
          helm install argocd -n argocd argo/argo-cd \
            --set redis-ha.enabled=true \
            --set controller.replicas=1 \
            --set server.autoscaling.enabled=true \
            --set server.autoscaling.minReplicas=2 \
            --set repoServer.autoscaling.enabled=true \
            --set repoServer.autoscaling.minReplicas=2 \
            --set applicationSet.replicaCount=2 \
            --set server.service.type=LoadBalancer \
            --create-namespace

      # Verify deployment
      - name: Verify the deployment
        run: helm list -n argocd
```

Altogether, the pipeline should look like the following.

name: 'Deploy ArgoCD To EKS'

on:

workflow_dispatch:

env:

EKS_REGION: "us-east-1"

EKS_CLUSTER: "prodEKS"

jobs:

deploy:

name: Deploy

runs-on: ubuntu-latest

steps:

- name: Checkout

uses: actions/checkout@v3

- name: Configure AWS credentials

uses: aws-actions/configure-aws-credentials@v2

with:

aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}

aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

aws-region: us-east-1

- name: Install Helm

run: |

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

- name: Check AWS version

run: |

aws --version

aws configure set aws_access_key_id $AWS_ACCESS_KEY_ID

aws configure set aws_secret_access_key $AWS_SECRET_ACCESS_KEY

aws configure set region $EKS_REGION

aws sts get-caller-identity

- name: Connect to EKS cluster

run: aws eks --region $EKS_REGION update-kubeconfig --name $EKS_CLUSTER

- name: Helm Install ArgoCD Repo

shell: bash

run: helm repo add argo https://argoproj.github.io/argo-helm

- name: Helm Install ArgoCD

shell: bash

run: helm install argocd -n argocd argo/argo-cd --set redis-ha.enabled=true --set controller.replicas=1 --set server.autoscaling.enabled=true --set server.autoscaling.minReplicas=2 --set repoServer.autoscaling.enabled=true --set repoServer.autoscaling.minReplicas=2 --set applicationSet.replicaCount=2 --set server.service.type=LoadBalancer --create-namespace

# Verify deployment

- name: Verify the deployment

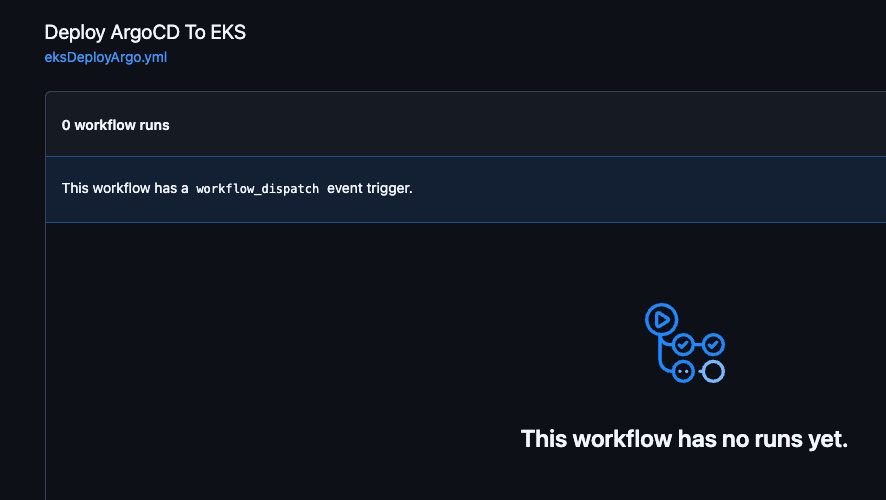

run: helm list -n argocdYou should now see the pipeline within GitHub Actions.
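Two refinements are worth considering, offered as a sketch rather than as part of the pipeline above. First, `helm install` fails if a release named `argocd` already exists, so rerunning the workflow (for example, after a partial failure) will error; `helm upgrade --install` installs the release if it's missing and upgrades it otherwise. Second, the `--set` flags can move into a values file kept in the repo. The file below mirrors the flags above one-to-one; the filename `argocd-values.yaml` is an assumption, and key names such as `applicationSet.replicaCount` can change between chart versions, so compare against `helm show values argo/argo-cd` for the version you install.

```yaml
# argocd-values.yaml (hypothetical filename): same settings as the --set flags
redis-ha:
  enabled: true
controller:
  replicas: 1
server:
  autoscaling:
    enabled: true
    minReplicas: 2
  service:
    type: LoadBalancer
repoServer:
  autoscaling:
    enabled: true
    minReplicas: 2
applicationSet:
  replicaCount: 2
```

With the file committed to the repo, the install step's command could become `helm upgrade --install argocd argo/argo-cd -n argocd --create-namespace -f argocd-values.yaml`, which makes reruns and redeploys of the management cluster repeatable.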
