kagent + Claude + k8s: Your Private Agentic Troubleshooter

Agents and agentic infrastructure give engineers a 24/7/365 engineering helper (with the right implementation, of course). The current predicament is that public, cloud-based LLMs (Claude, GPT, etc.) don't run inside your cluster, so they can't look at your workloads and help you figure out what is going wrong.

That's where kagent comes into play. With the combination of kagent and Claude, you have an agentic LLM helper within your Kubernetes environment.

In this blog post, we go over how to configure kagent and troubleshoot your environment.

Prerequisites

To follow along with this blog post from a hands-on perspective, you should have the following:

- A Kubernetes cluster up and running, with kubectl configured to access it.
- Helm installed.
- An Anthropic API key.

If you don't have a Kubernetes cluster, that's totally fine! You can still follow along from a theoretical perspective and use the hands-on piece at a later time.
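If you do have a cluster, a quick preflight check can save a failed install. The sketch below is a hypothetical bash helper (not part of kagent or Helm); it only checks that the named tools are on your PATH and that `ANTHROPIC_API_KEY` is set, so run `require_api_key` after you export the key in the install steps.

```shell
# Hypothetical preflight helpers (not part of kagent or Helm), written for bash.

# Succeeds only if every command passed as an argument is on PATH;
# prints each missing command to stderr.
require_cmds() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing command: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Succeeds only if ANTHROPIC_API_KEY is set and non-empty.
require_api_key() {
  if [ -z "${ANTHROPIC_API_KEY:-}" ]; then
    echo "ANTHROPIC_API_KEY is not set" >&2
    return 1
  fi
}
```

Usage: `require_cmds kubectl helm && require_api_key && kubectl cluster-info`. The last command is a real kubectl call that confirms your current context can reach the cluster.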

Configuring kagent

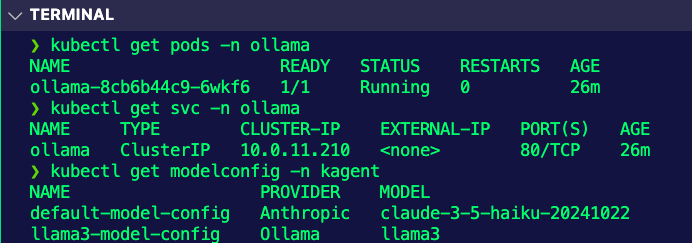

In this section, you'll learn how to deploy the open-source version of kagent.

- Install the CRDs via help to your Kubernetes cluster.

helm install kagent-crds oci://ghcr.io/kagent-dev/kagent/helm/kagent-crds \

--namespace kagent \

--create-namespace- Save your Anthropic API key in an environment variable.

export ANTHROPIC_API_KEY=your_api_key- Install kagent with Claude as the default LLM.

helm upgrade --install kagent oci://ghcr.io/kagent-dev/kagent/helm/kagent \

--namespace kagent \

--set providers.default=anthropic \

--set providers.anthropic.apiKey=$ANTHROPIC_API_KEY- Access the kagent dashboard by port-forwarding the UI service and reaching it via a browser.

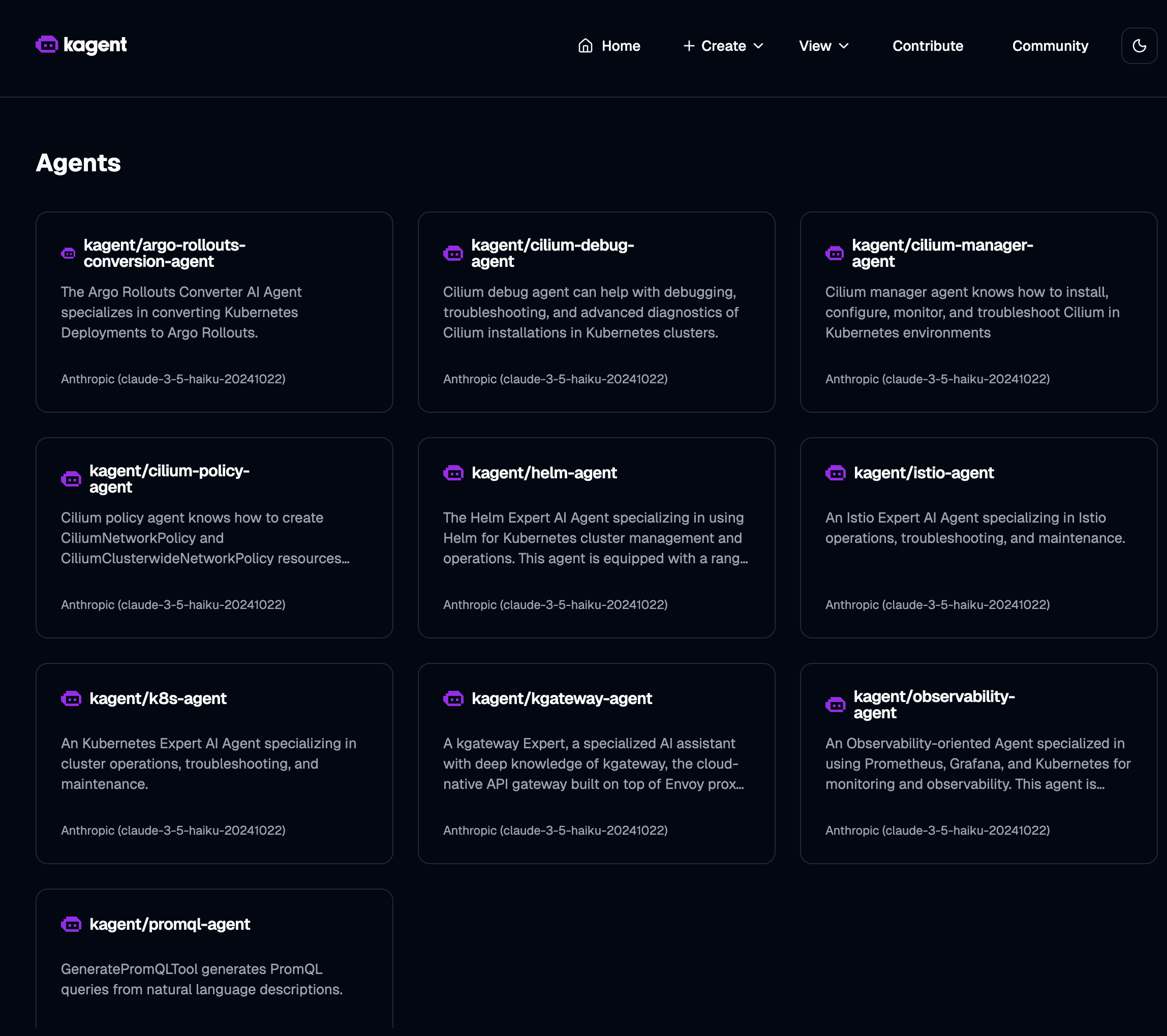

kubectl port-forward svc/kagent-ui -n kagent 8080:8080You should see a screen similar to the below:
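Before prompting an agent, it can help to confirm that everything in the `kagent` namespace actually came up. The sketch below is a hypothetical bash + awk helper (not a kagent command): it reads `kubectl get pods --no-headers` output and prints any Pod whose READY counts don't match or whose STATUS isn't `Running`.

```shell
# Hypothetical helper (not part of kagent), written for bash + awk.
# Reads `kubectl get pods --no-headers` output on stdin and prints
# "<name> <status>" for every Pod that is not fully ready.
not_ready_pods() {
  awk '{
    split($2, ready, "/")   # READY column, e.g. "1/1" -> ready[1]=1, ready[2]=1
    if (ready[1] != ready[2] || $3 != "Running") print $1, $3
  }'
}
```

Usage: `kubectl get pods -n kagent --no-headers | not_ready_pods`. Empty output means every Pod is ready. Pods from completed Jobs would also be listed, which is usually harmless. If you'd rather block until things are ready, kubectl's built-in `kubectl wait --for=condition=Ready pod --all -n kagent --timeout=300s` does the same job without any parsing.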

Using Your Agent As An SRE Agent

With kagent running in Kubernetes and using Claude as its model, it's time to put it to the test. One use case that appeals to engineers in the cloud-native space is a Kubernetes troubleshooter, or an "SRE Agent" of sorts. To test how well kagent and Claude work as your 24/7/365 troubleshooting helper, let's deploy a failing Pod.

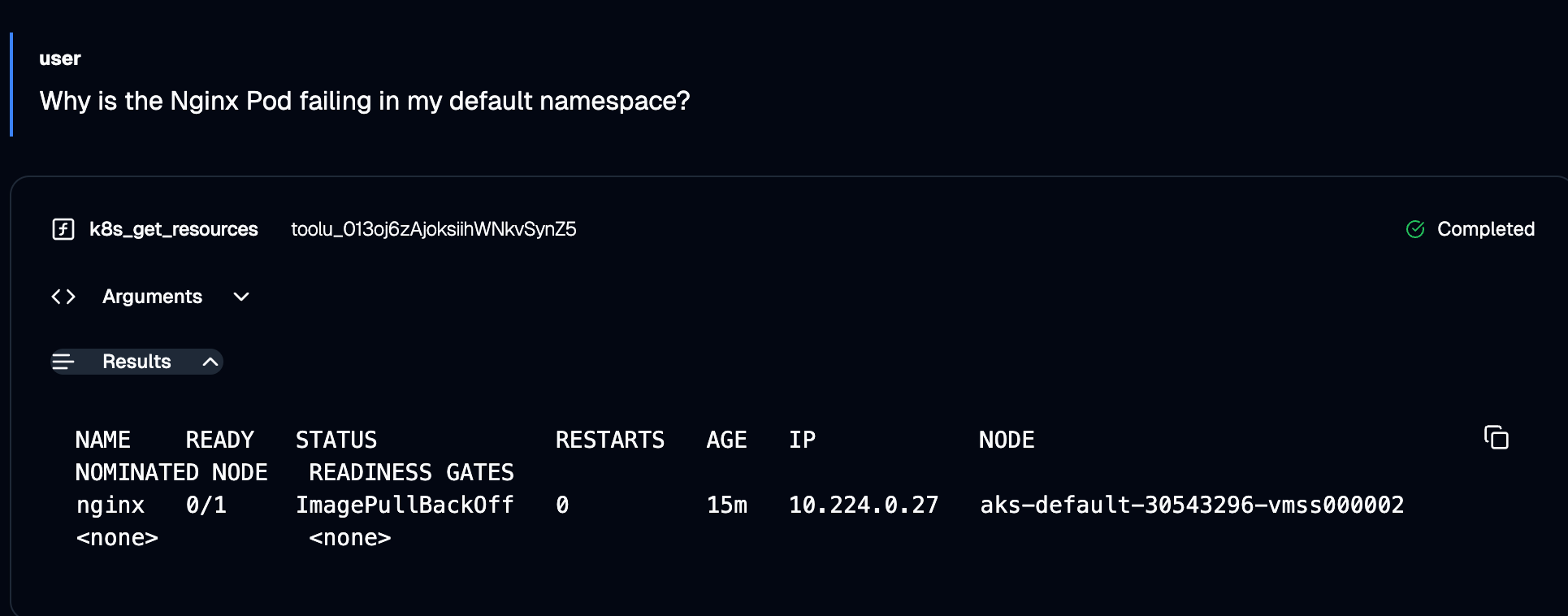

The manifest below deploys an Nginx Pod, but notice that the image tag is wrong. Instead of `latest`, the tag is `latesttt`, which doesn't exist, so the image can't be pulled and the Pod will fail to start.

Deploy the manifest below. It will fail, but that is on purpose: the Pod will report an ErrImagePull error, which becomes ImagePullBackOff as Kubernetes keeps retrying.

kubectl apply -f - <<EOF

apiVersion: v1

kind: Pod

metadata:

name: nginx

spec:

containers:

- name: nginx

image: nginx:latesttt

ports:

- containerPort: 80

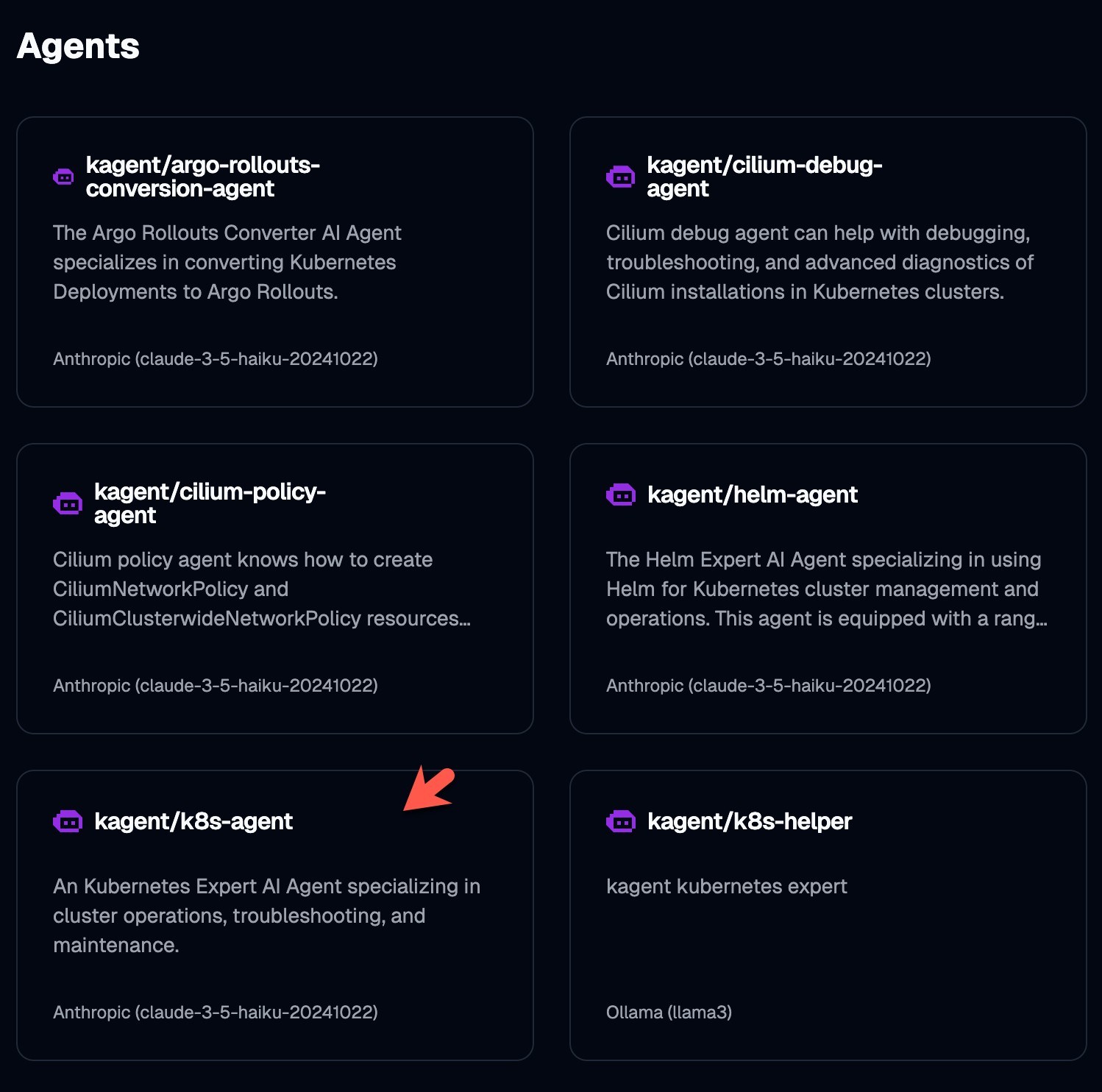

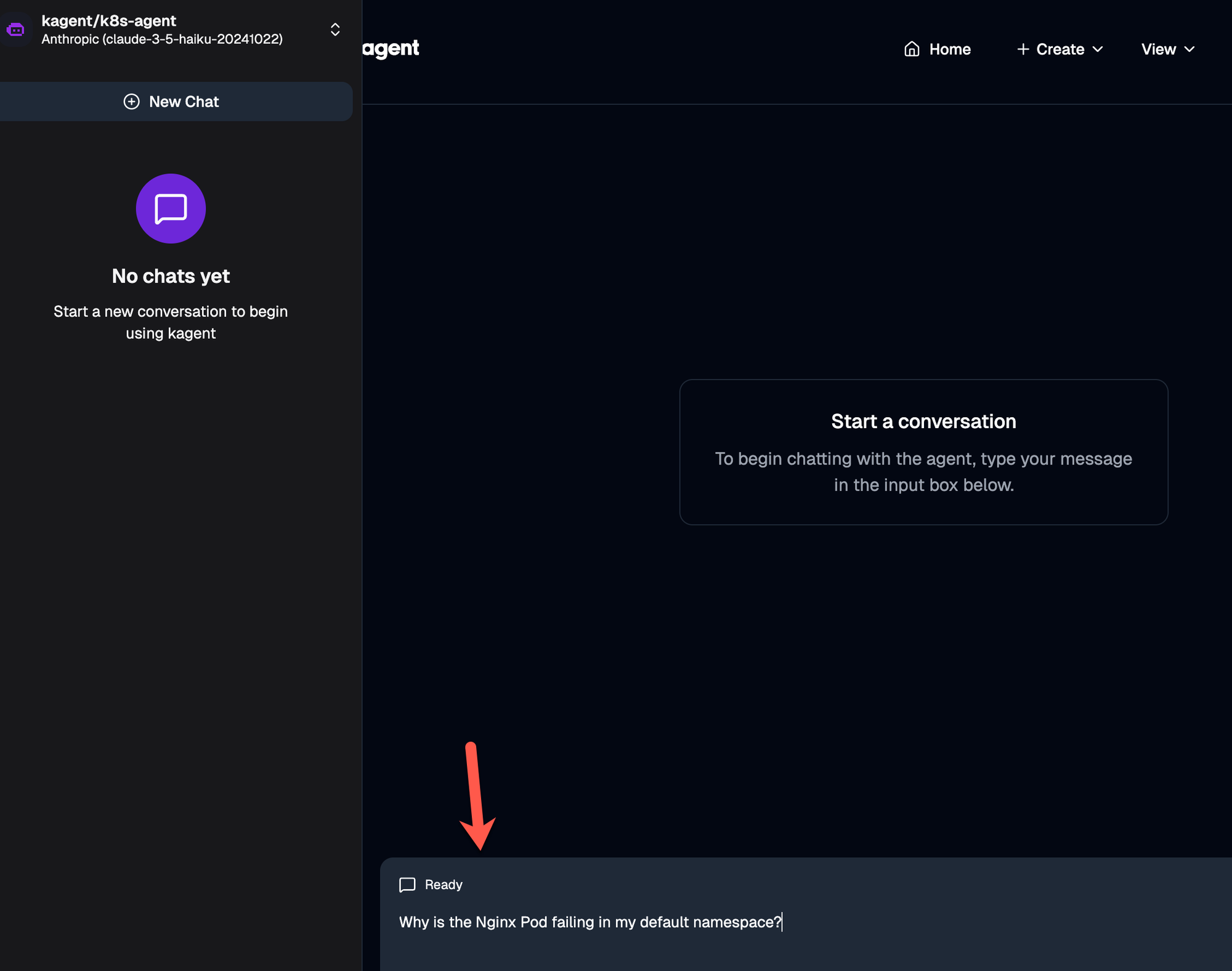

EOF- Open up kagent and go to the pre-built

k8s-agentAgent.

- Prompt the Agent with: "Why is the Nginx Pod failing in my default namespace?"

- You'll notice that kagent goes through several steps, not only to debug the issue but also to fix it.

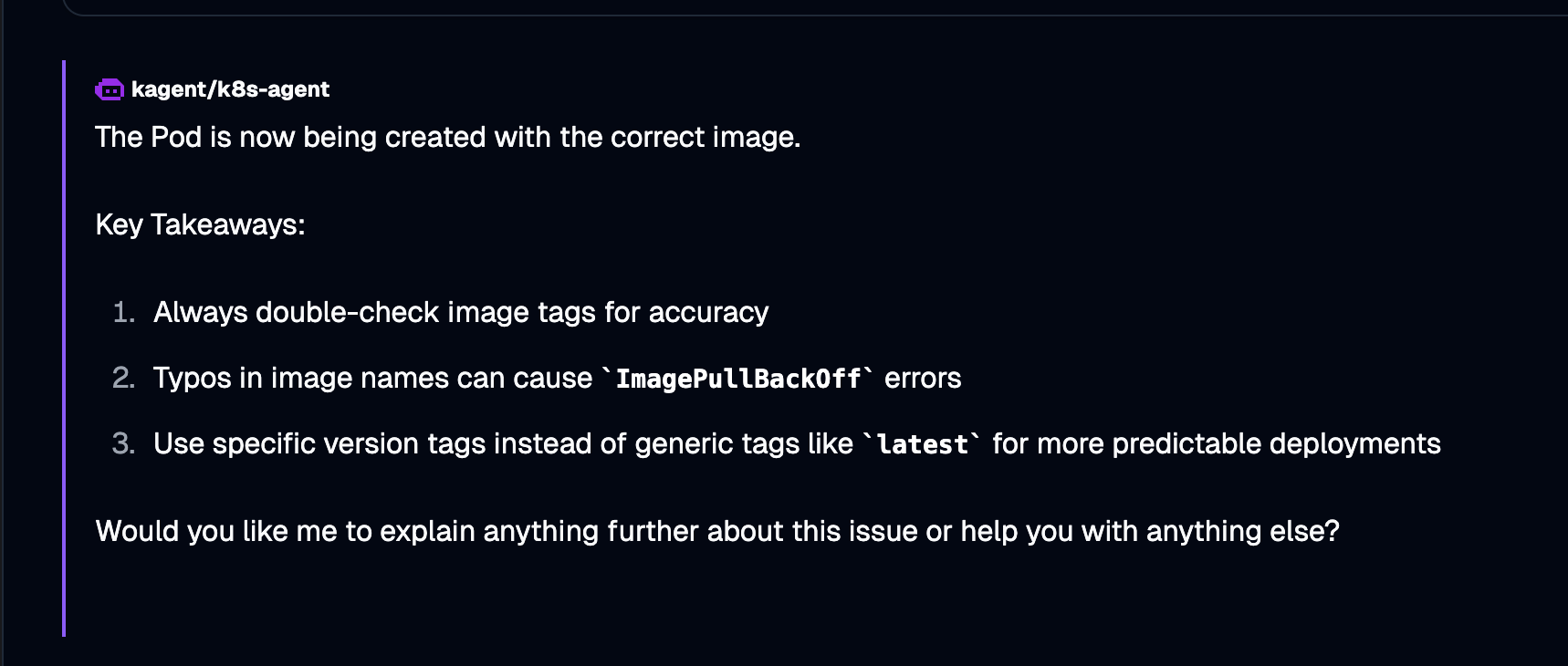

- Once kagent has identified the issue, it applies the fix: it re-deploys the Pod automatically with a valid container image.
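If you'd like to cross-check the agent's diagnosis by hand, you can read the reason Kubernetes reports for the failure. The sketch below is a hypothetical bash helper (not part of kagent) built on kubectl's JSONPath output; the function names are illustrative. Run it while the Pod is still failing, for example right after applying the broken manifest and before prompting the agent, because once the fix lands the waiting reason comes back empty.

```shell
# Hypothetical helpers (not part of kagent), written for bash.

# Prints the waiting reason of a Pod's first container, e.g. ErrImagePull.
# Prints nothing if the container isn't waiting. Namespace defaults to "default".
pod_waiting_reason() {
  kubectl get pod "$1" -n "${2:-default}" \
    -o jsonpath='{.status.containerStatuses[0].state.waiting.reason}'
}

# Succeeds (returns 0) only if the Pod is stuck because its image can't be pulled.
is_image_pull_failure() {
  case "$(pod_waiting_reason "$@")" in
    ErrImagePull|ImagePullBackOff) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: `is_image_pull_failure nginx && kubectl describe pod nginx`. The `describe` output's Events section shows the failed pull for `nginx:latesttt`.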

You can confirm that the Nginx Pod is now running.

```shell
kubectl get pods
```

```
NAME    READY   STATUS    RESTARTS   AGE
nginx   1/1     Running   0          53s
```
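The `kubectl get pods` output shows the status but not which image the agent switched to. The sketch below is a hypothetical bash helper (not part of kagent) that prints both the Pod's phase and its first container's image, and succeeds only if the phase is `Running`; the function name is illustrative.

```shell
# Hypothetical helper (not part of kagent), written for bash.
# Prints the Pod's phase and first container image, and succeeds only
# if the phase is Running. Namespace defaults to "default".
verify_pod_fixed() {
  local pod="$1" ns="${2:-default}" phase image
  phase=$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.status.phase}') || return 1
  image=$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.containers[0].image}') || return 1
  echo "$pod: phase=$phase image=$image"
  [ "$phase" = "Running" ]
}
```

Usage: `verify_pod_fixed nginx`. Checking the image as well as the phase tells you exactly which tag the agent chose, rather than taking the `Running` status on faith.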
