Using Claude and LLMs as Your DevOps & Platform Engineering Assistant

Engineering is in constant change: from mainframes to servers to virtualization to cloud and everything in between (and after). One thing, despite the constant change, always stays the same: engineers and leadership teams want the ability to move faster, yet stay efficient and performant.

What AI (in reference to LLMs) gives us at this time is exactly that.

In this blog post, you'll learn why you should think about using AI in DevOps and how to jump in with one example, prompting Terraform configuration.

Why Should We Use AI in DevOps?

There was a time when the only way to actually write code was to write your own editor or write it on a piece of paper with a pen. There was also a time when you had to build your own garbage collection (memory management) and compilers. Now, we have high-level programming languages with built-in garbage collection. We can write code in fancy editors with plugins that do everything from making us more effective (ahem, git blame anyone?) to changing the color of our IDE (sparkle sparkle).

Throughout time, things in engineering have changed drastically and in a very short amount of time (software engineering is only 65 years old... that's 1 person ago). That's innovation and moving the needle; it's what we need as a society.

"AI" (we need to come up with a better name for it)/LLMs are giving us the next path forward. Now, you can simply ask (prompt) an LLM to write some code and it'll do it. It may not be 100% right or exactly how you want it, but that's okay because your job as an engineer is to design, architect, update, and fix it up in the way that you're hoping it'll work.

This is ultimately a great level of innovation because it allows us to move faster. We can use Claude Code, Gemini within Cursor/GitHub Copilot, or any other method of using an LLM to generate a template of what we want to deploy. We now get to move that part of the equation to the "low-hanging fruit" category. Think about it: do you really need to write yet another unit test or Terraform resource block? You can, and if you don't know how to do either, you should do it manually to learn. However, if you're an experienced engineer in these areas, you don't have to.

A great example of this is when Windows Sysadmins moved from just a GUI via Server Manager to PowerShell. It got to the point where everyone said, "Ya know, I really don't have to click the 'Next' button again for the millionth time. Let me automate this."

Why You Should Trust but Verify

In my consulting practice, I have clients that are making very heavy use of LLMs/AI. I also have clients who have mandated not using it. For the clients I'm using AI with, what I can confidently say is that it's speeding up certain aspects of the job (e.g., writing a Terraform resource block), but it also creates a lot more work. A great example of this is hallucinations. I've had LLMs hallucinate bugs, API endpoints that don't exist, and many other things. It's to the point where you need to trust but verify. It's great as an assistant, but we're not going to be drinking mojitos, kicking back on the beach, and collecting a paycheck anytime soon.

Choosing Your Tool

After you drink the AI Kool-Aid and convince yourself to start using it, you'll need to think about the tool you want to use. There's a very important distinction in the tooling.

You have:

- The LLM you're going to use.

- The interaction point to the LLM.

As an example, the LLM you may decide to use could be Claude-4-sonnet and the interaction point (tool) you decide to use to interact with Claude-4-sonnet could be GitHub Copilot.

Currently, if you're looking for an IDE experience to interact with an LLM, Cursor and GitHub Copilot appear to be the standards.

If you're looking for a tool to interact with Claude Models via the CLI, there are Claude Code, Goose, and Aider. What's really great about Goose and Aider is that you can use them to interact with LLMs that aren't just Claude. For example, you can use them to interact with Gemini and GPT.

Prompting in Cursor

In the previous section, you learned about some tool choices. In this section, you'll dive into one of many options when it comes to tools and interaction points - Cursor and Claude.

If you'd like to follow along in this blog post from a hands-on perspective, you can download Cursor here.

The project that you'll build in this blog post is a Terraform Module to create Azure Virtual Machines (VM). If you don't use Azure and you use another cloud, you can still follow 99% of this blog post. Just replace anything that's Azure-related with the cloud you're using.

For example, the prompt below (you'll see in step 3) says to create an Azure VM. If you're on AWS, change that to "AWS EC2 instance"

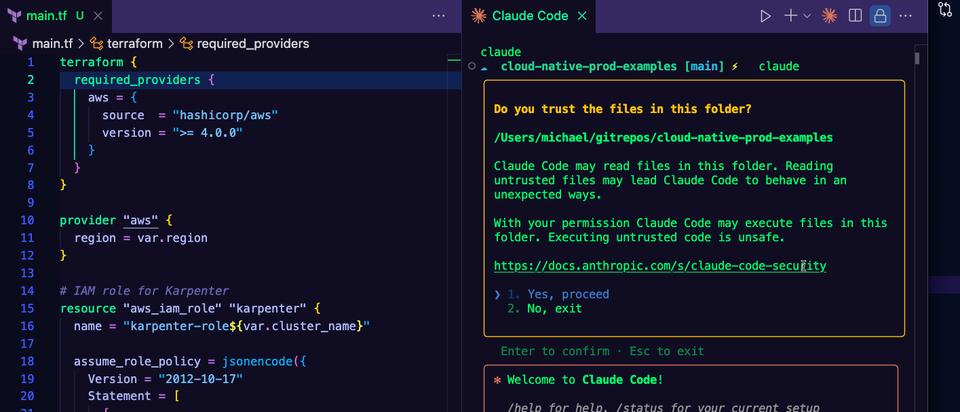

- Open up Cursor after you download it.

You'll see that it looks VERY similar to VS Code, and that's because it's a fork of VS Code.

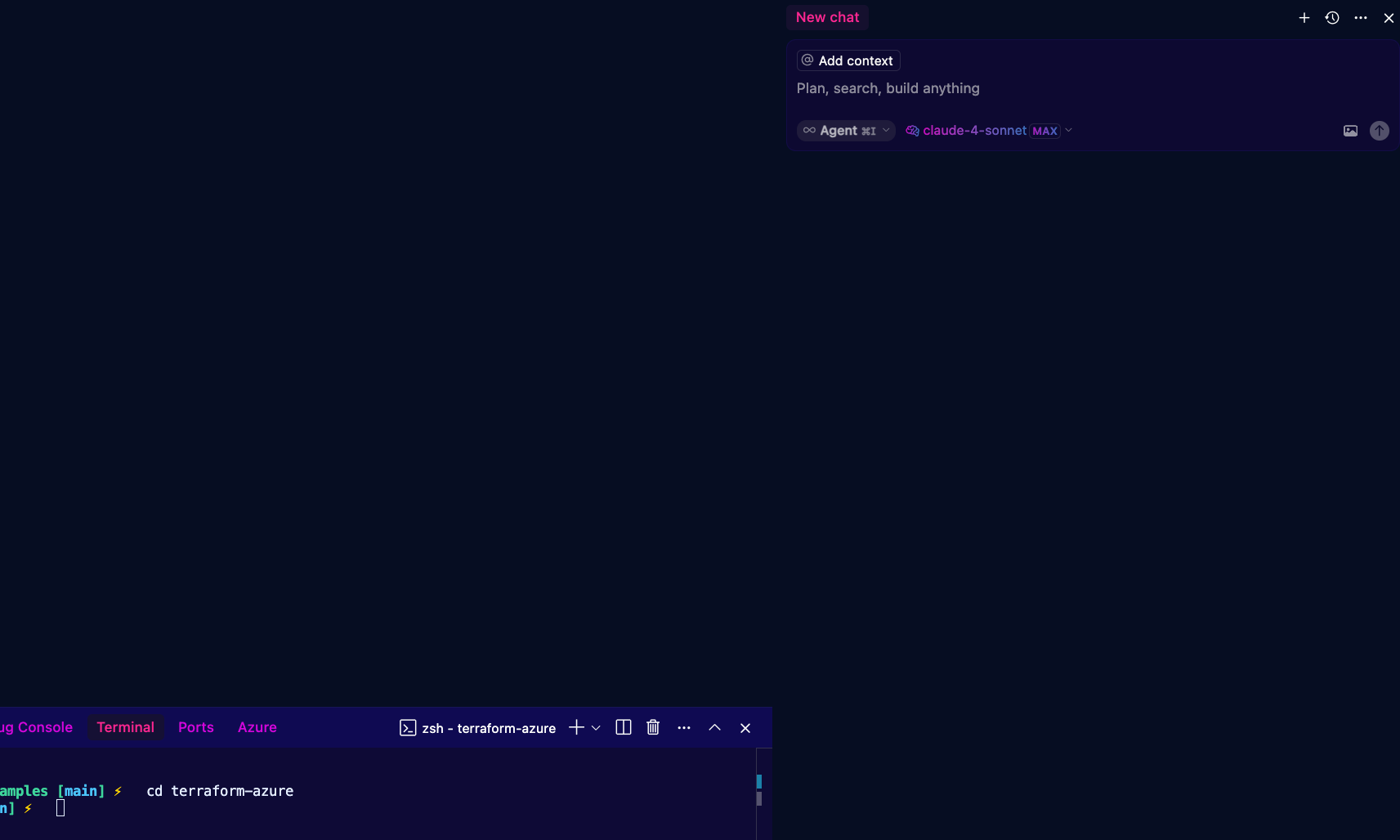

- On the right side, you'll see a chat window. This chat window is where you specify if you want to use an Agent to perform some work or the "ask" feature for a chatbot/ask a question experience.

For the purposes of this blog post, you'll use Agent mode and choose Claude-4-sonnet as your LLM.

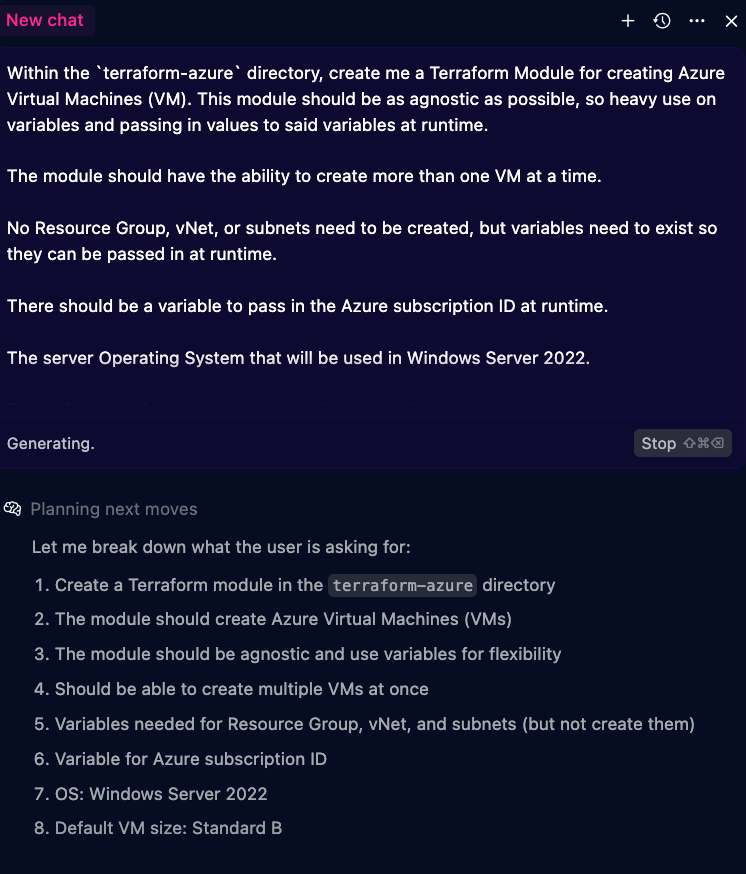

- When using LLM/AI tooling, you'll use something called a "prompt", which is really just a description of what you want to create. The key with a prompt is to be as specific as possible from an architecture and design perspective. As of right now, the output from an LLM is only as good as the detail in your prompt.

Use the following prompt:

```
Within the `terraform-azure` directory, create me a Terraform Module for creating Azure Virtual Machines (VM). This module should be as agnostic as possible, so heavy use on variables and passing in values to said variables at runtime.

The module should have the ability to create more than one VM at a time.

No Resource Group, vNet, or subnets need to be created, but variables need to exist so they can be passed in at runtime.

There should be a variable to pass in the Azure subscription ID at runtime.

The server Operating System that will be used is Windows Server 2022.

The default size for the VM should be Standard B2.

A Storage Account called `adtfstate` will be where the Terraform State is stored, so that should be in the backend configuration.
```

- You'll begin to see AI working. Don't just accept everything it tells you: look at the output and what it's creating, and read through the code to ensure that it's what you're expecting. Remember: trust but verify.

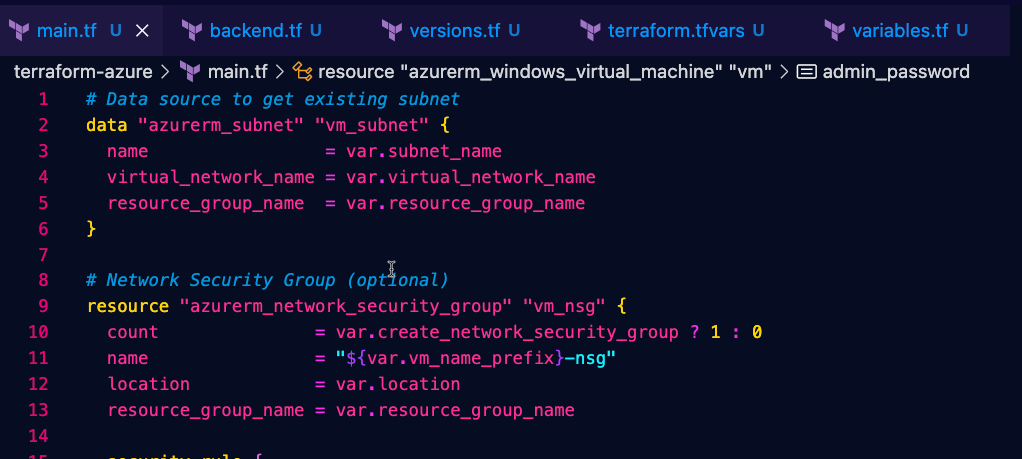

You should have an output similar to the one below.

You'll have to, of course, go through the code (trust but verify) to ensure that it was everything you were hoping for. For example, I saw a few things that I wanted to change based on the specifics of the environment and some things I didn't like (too many outputs).

That's the goal of using an LLM: to help you generate a template that you can then go in and make yours. It's just templating at a very fast pace.
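Part of the "trust but verify" review can be scripted. The sketch below is one hypothetical way to do a first pass: it pulls every `resource` type out of generated Terraform text with a regular expression and flags any type you did not expect. The function name `unexpected_resource_types`, the sample configuration, and the allowlist are all assumptions made for illustration. The regex is a rough match, not a real HCL parser, so treat it as a supplement to reading the code, not a replacement.

```python
import re

# Matches the opening of a block such as:
#   resource "azurerm_windows_virtual_machine" "vm" {
RESOURCE_RE = re.compile(r'^\s*resource\s+"([^"]+)"\s+"([^"]+)"', re.MULTILINE)


def unexpected_resource_types(terraform_text: str, allowed: set[str]) -> list[str]:
    """Return resource types found in the text that are not in the allowlist."""
    found = {match.group(1) for match in RESOURCE_RE.finditer(terraform_text)}
    return sorted(found - allowed)


# Hypothetical generated output. The prompt asked for VMs only,
# so a Resource Group showing up is worth a second look.
generated = '''
resource "azurerm_network_interface" "nic" {
  name = "example-nic"
}

resource "azurerm_windows_virtual_machine" "vm" {
  name = "example-vm"
}

resource "azurerm_resource_group" "rg" {
  name = "example-rg"
}
'''

allowed_types = {"azurerm_network_interface", "azurerm_windows_virtual_machine"}
flagged = unexpected_resource_types(generated, allowed_types)
print(flagged)
```

Anything that lands in `flagged` is not necessarily wrong; it is just a prompt to go look at that block before you accept it.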

Getting Programmatic

You may have an IDE that has some LLM capabilities built in, but what about if you want to programmatically interact with an LLM? Luckily, there are several SDKs available.

From an Anthropic perspective, there are:

- The Anthropic SDK: https://github.com/anthropics/anthropic-sdk-python

- The Claude Code SDK: https://github.com/anthropics/claude-code-sdk-python/

For this section, we'll use the Claude Code SDK.

To follow along with this section, you'll need to:

- Have Python installed.

- Create a free Anthropic account on the console: https://console.anthropic.com/.

- Generate an API key from the console.

- Set the Anthropic API key as an environment variable. You can either do that in your:

  - `~/.zshrc` file
  - `~/.bashrc` file
  - On the terminal

The value you'll want to set is: export ANTHROPIC_API_KEY="YOUR_API_KEY"
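Before calling the API from a script, it can help to fail fast when the key is missing. The sketch below is a minimal preflight check, not part of the SDK: the helper name `get_api_key` and its optional `env` parameter are hypothetical, and the only thing taken from this post is the `ANTHROPIC_API_KEY` variable name.

```python
import os


def get_api_key(env=None) -> str:
    """Return the Anthropic API key from the environment or raise a clear error."""
    # Fall back to the real process environment when no mapping is passed in.
    env = os.environ if env is None else env
    key = env.get("ANTHROPIC_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "ANTHROPIC_API_KEY is not set. Export it in your shell profile or terminal first."
        )
    return key


# Demonstrated with a stand-in mapping so the example never touches a real key.
placeholder = get_api_key({"ANTHROPIC_API_KEY": "YOUR_API_KEY"})
print("Key found, length:", len(placeholder))
```

In a real script you would call `get_api_key()` with no arguments at the top of `main()` so a missing key surfaces immediately.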

- Create a directory called `claude`.

- Create a new Python virtual environment (this will create a virtual environment based on your default Python3 version).

```
python3 -m venv claude
```

- Activate the virtual environment.

```
source claude/bin/activate
```

- Create a `requirements.txt` file within the `claude` directory and add the following:

```
claude-code-sdk
typing_extensions
anyio
```

Then install the packages with `pip install -r requirements.txt`.

- Now it's time to set up a quick script to interact with the SDK.

The first step is to set the imports.

from claude_code_sdk import query, ClaudeCodeOptions

from pathlib import Path

import anyioNext, specify a function. The async keyword in front of the main function will allow for asynchronous functionality for concurrency.

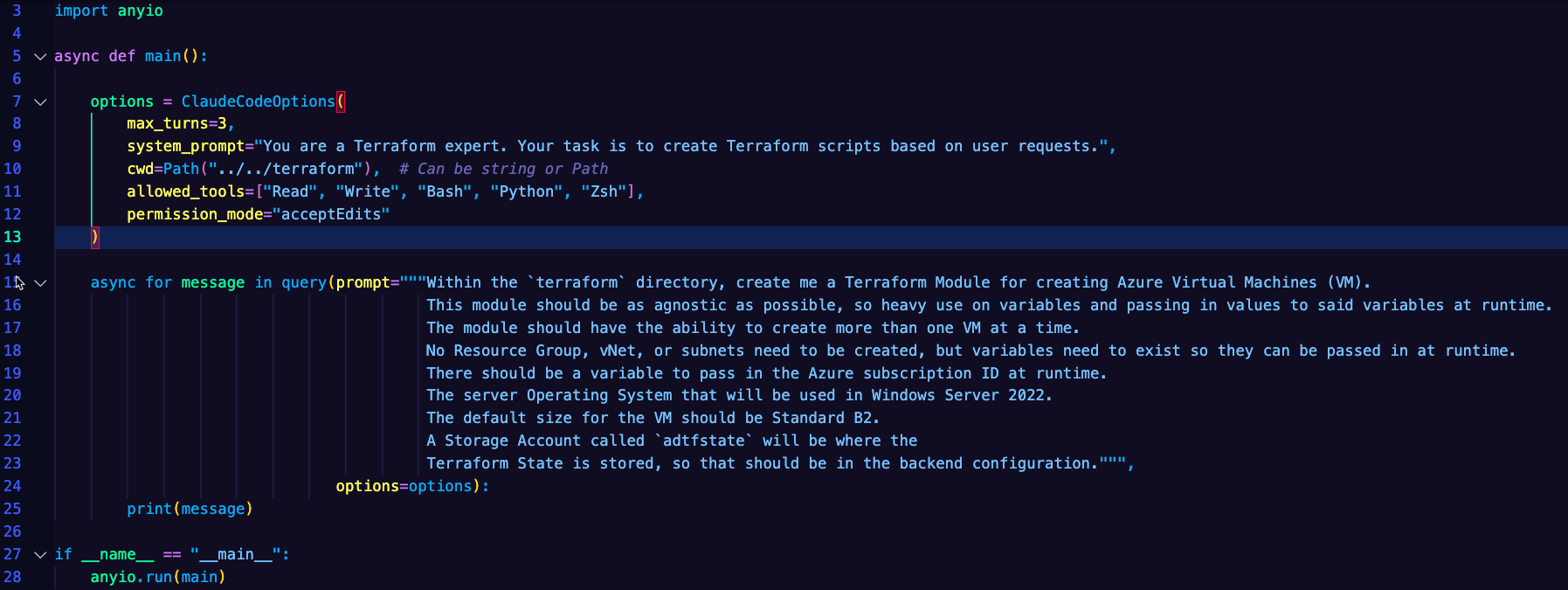

async def main():Within the function, you'll want to set up ClaudeCodeOptions which allows you to specify parameters regarding permissions, prompts, the path where the generated code should be set, and a few others. You can see all of the options within the local Claude Code SDK library under claude_code_sdk/types.py.

options = ClaudeCodeOptions(

max_turns=3,

system_prompt="You are a Terraform expert. Your task is to create Terraform scripts based on user requests.",

cwd=Path("../../terraform"), # Can be string or Path

allowed_tools=["Read", "Write", "Bash", "Python", "Zsh"],

permission_mode="acceptEdits"

)The final step before running the code is to specify the prompt.

async for message in query(prompt="""Within the `terraform` directory, create me a Terraform Module for creating Azure Virtual Machines (VM).

This module should be as agnostic as possible, so heavy use on variables and passing in values to said variables at runtime.

The module should have the ability to create more than one VM at a time.

No Resource Group, vNet, or subnets need to be created, but variables need to exist so they can be passed in at runtime.

There should be a variable to pass in the Azure subscription ID at runtime.

The server Operating System that will be used in Windows Server 2022.

The default size for the VM should be Standard B2.

A Storage Account called `adtfstate` will be where the

Terraform State is stored, so that should be in the backend configuration.""",

options=options):

print(message)Putting the code together, it'll look like the below:

```python
from claude_code_sdk import query, ClaudeCodeOptions
from pathlib import Path
import anyio


async def main():
    # Configure the run: turn limit, system prompt, working directory, tools, and permissions.
    options = ClaudeCodeOptions(
        max_turns=3,
        system_prompt="You are a Terraform expert. Your task is to create Terraform scripts based on user requests.",
        cwd=Path("../../terraform"),  # Can be string or Path
        allowed_tools=["Read", "Write", "Bash", "Python", "Zsh"],
        permission_mode="acceptEdits"
    )

    # Stream messages back as the prompt is worked on and print each one.
    async for message in query(
        prompt="""Within the `terraform` directory, create me a Terraform Module for creating Azure Virtual Machines (VM).
This module should be as agnostic as possible, so heavy use on variables and passing in values to said variables at runtime.
The module should have the ability to create more than one VM at a time.
No Resource Group, vNet, or subnets need to be created, but variables need to exist so they can be passed in at runtime.
There should be a variable to pass in the Azure subscription ID at runtime.
The server Operating System that will be used is Windows Server 2022.
The default size for the VM should be Standard B2.
A Storage Account called `adtfstate` will be where the
Terraform State is stored, so that should be in the backend configuration.""",
        options=options,
    ):
        print(message)


if __name__ == "__main__":
    anyio.run(main)
```
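Printing every message as-is gets noisy. As a sketch, the helper below pulls only the text out of each message. It assumes, based on the SDK's README, that assistant messages expose a `content` list whose text blocks carry a `text` attribute. The helper name `extract_text` is hypothetical, the function is duck-typed, and it is shown here with stand-in objects rather than real SDK messages, so check the message types in `claude_code_sdk/types.py` before relying on it.

```python
from types import SimpleNamespace


def extract_text(message) -> list[str]:
    """Collect the text of every block in a message that has a string `text` attribute."""
    content = getattr(message, "content", None)
    if not isinstance(content, list):
        # Messages without a list of blocks (for example, a final result message) yield nothing.
        return []
    return [
        block.text
        for block in content
        if isinstance(getattr(block, "text", None), str)
    ]


# Stand-in objects that mimic the assumed shape of SDK messages.
assistant_like = SimpleNamespace(
    content=[
        SimpleNamespace(text="Created main.tf"),
        SimpleNamespace(name="Write", input={}),  # tool-use-like block with no text
    ]
)
result_like = SimpleNamespace(subtype="success")

print(extract_text(assistant_like))
print(extract_text(result_like))
```

Inside the `async for` loop, `print(message)` could then become `for line in extract_text(message): print(line)`.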

- Save the script as `main.py` and run it with:

```
python main.py
```

Closing Thoughts

As you can see, even with LLMs/AI, there is still a lot of work to do. This isn't a case of "sit back, relax, and let the robots do your work". As a DevOps Engineer, Platform Engineer, or Software Engineer, you'll have plenty of interesting and intriguing pieces of work to implement.

Thanks for reading!
